A Deep Dive into Pandas: Mastering Data Analysis with Python

Introduction

Pandas, a Python library built on top of NumPy, has become a standard tool for data scientists and analysts. Its two core data structures, Series and DataFrame, provide efficient ways to manipulate, analyze, and explore tabular data. This guide surveys the library, from basic operations to more advanced techniques.

Understanding Pandas Data Structures

Series

A Series is a one-dimensional labeled array capable of holding any data type (integers, floats, strings, objects, etc.).

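import pandas as pd

# Build a Series from a list; Pandas assigns a default integer index (0 to 4)
data = [1, 2, 3, 4, 5]
series = pd.Series(data)

# Printing shows the index on the left and the values on the right
print(series)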
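The "labeled" part of the definition is easier to see with an explicit index. The following is a minimal sketch; the labels and values are hypothetical and chosen only for illustration.

```python
import pandas as pd

# Hypothetical labels and values, chosen only for illustration
prices = pd.Series([1.5, 2.0, 0.75], index=["apple", "banana", "cherry"])

# Label-based access uses the index, not the position
banana_price = prices["banana"]

# Position-based access goes through .iloc
first_price = prices.iloc[0]

# Arithmetic between two Series aligns on labels, not on order
discount = pd.Series({"cherry": 0.25, "apple": 0.5})
discounted = prices - discount  # "banana" has no discount, so it becomes NaN

print(discounted)
```

Because alignment happens on labels, the order of the entries in the second Series does not matter.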

DataFrame

A DataFrame is a two-dimensional labeled data structure with columns of potentially different types. It is analogous to a spreadsheet or SQL table.

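# Each dictionary key becomes a column name; each list holds that column's values
data = {'column1': [1, 2, 3], 'column2': ['a', 'b', 'c']}
df = pd.DataFrame(data)

# column1 holds integers and column2 holds strings
print(df)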

Data Ingestion and Export

Pandas offers versatile functions for reading data from various file formats and exporting results:

- Reading data:

  - pd.read_csv(): Read data from CSV files
  - pd.read_excel(): Read data from Excel files
  - pd.read_json(): Read data from JSON files
  - pd.read_sql(): Read data from SQL databases

- Exporting data:

  - df.to_csv(): Write DataFrame to CSV
  - df.to_excel(): Write DataFrame to Excel
  - df.to_json(): Write DataFrame to JSON
  - df.to_sql(): Write DataFrame to SQL database
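As a rough illustration of the read and write functions, the sketch below round-trips a small DataFrame through CSV. It uses an in-memory text buffer rather than a file on disk so that it runs anywhere; that choice is an assumption made for the example.

```python
import io

import pandas as pd

df = pd.DataFrame({"column1": [1, 2, 3], "column2": ["a", "b", "c"]})

# Write to an in-memory text buffer instead of a file on disk;
# index=False leaves the row labels out of the output
buffer = io.StringIO()
df.to_csv(buffer, index=False)
csv_text = buffer.getvalue()

# Read the same text back into a new DataFrame
restored = pd.read_csv(io.StringIO(csv_text))

print(csv_text)
print(restored)
```

With real files, the buffer would be replaced by a path, for example a hypothetical "data.csv".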

Data Exploration and Cleaning

- Basic exploration:

  - df.head(): View the first few rows
  - df.tail(): View the last few rows
  - df.shape: Get the number of rows and columns
  - df.info(): Get column data types and non-null counts
  - df.describe(): Generate descriptive statistics

- Handling missing values:

  - df.isnull(): Check for missing values
  - df.fillna(): Fill missing values
  - df.dropna(): Drop rows or columns with missing values

- Data types:

  - df.dtypes: Get data types of columns
  - pd.to_numeric(), pd.to_datetime(): Convert data types

- Duplicates:

  - df.duplicated(): Check for duplicates
  - df.drop_duplicates(): Remove duplicates
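The exploration and cleaning calls listed above could be combined roughly as follows. This is a sketch on hypothetical data; filling missing scores with the column mean is just one possible strategy, not a recommendation.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data with missing values, a numeric column stored
# as text, and one fully duplicated row
raw = pd.DataFrame({
    "name": ["Ann", "Bob", "Bob", "Cara"],
    "age": ["34", "41", "41", "not available"],
    "score": [88.0, np.nan, np.nan, 92.5],
})

print(raw.shape)           # (rows, columns)
print(raw.dtypes)          # "age" is not numeric yet
print(raw.isnull().sum())  # missing values per column

# Drop exact duplicate rows, keeping the first occurrence
clean = raw.drop_duplicates().copy()

# Convert text to numbers; values that cannot be parsed become NaN
clean["age"] = pd.to_numeric(clean["age"], errors="coerce")

# Fill missing scores with the column mean (one choice among many)
clean["score"] = clean["score"].fillna(clean["score"].mean())

# Drop rows that still have a missing value in any column
complete = clean.dropna()

print(complete)
```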

Data Manipulation

- Selection and indexing:

  - df[column_name]: Select a column
  - df.loc[row_label]: Select rows by label
  - df.iloc[row_index]: Select rows by integer position
  - df.at[row_label, column_label]: Select a single value by label
  - df.iat[row_index, column_index]: Select a single value by integer position

- Filtering:

  - Boolean indexing: Keep the rows where a condition is True
  - df.query(): Filter rows with an expression string

- Sorting:

  - df.sort_values(): Sort by column values

- Grouping and aggregation:

  - df.groupby(): Group data by one or more columns
  - Aggregation functions: mean, sum, count, min, max, std, etc.

- Concatenation and merging:

  - pd.concat(): Concatenate DataFrames
  - pd.merge(): Merge DataFrames based on common columns
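To make the selection, filtering, and sorting entries concrete, here is a minimal sketch. The city and temperature data and the row labels are hypothetical; explicit row labels are used only to show how labels differ from integer positions.

```python
import pandas as pd

# Hypothetical data; the row labels are set explicitly to show
# the difference between labels and integer positions
df = pd.DataFrame(
    {"city": ["Oslo", "Lima", "Pune", "Kyiv"], "temp": [4, 19, 31, 9]},
    index=["r1", "r2", "r3", "r4"],
)

temps = df["temp"]                    # one column, returned as a Series
row_by_label = df.loc["r2"]           # row with label "r2"
row_by_position = df.iloc[1]          # second row, the same row as above
value_by_label = df.at["r3", "temp"]  # single value by labels
value_by_position = df.iat[2, 1]      # single value by positions

# Boolean indexing: keep rows where the condition is True
warm = df[df["temp"] > 8]

# The same filter written as a query string
warm_query = df.query("temp > 8")

# Sort by a column, highest temperature first
ranked = df.sort_values("temp", ascending=False)

print(warm)
print(ranked)
```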

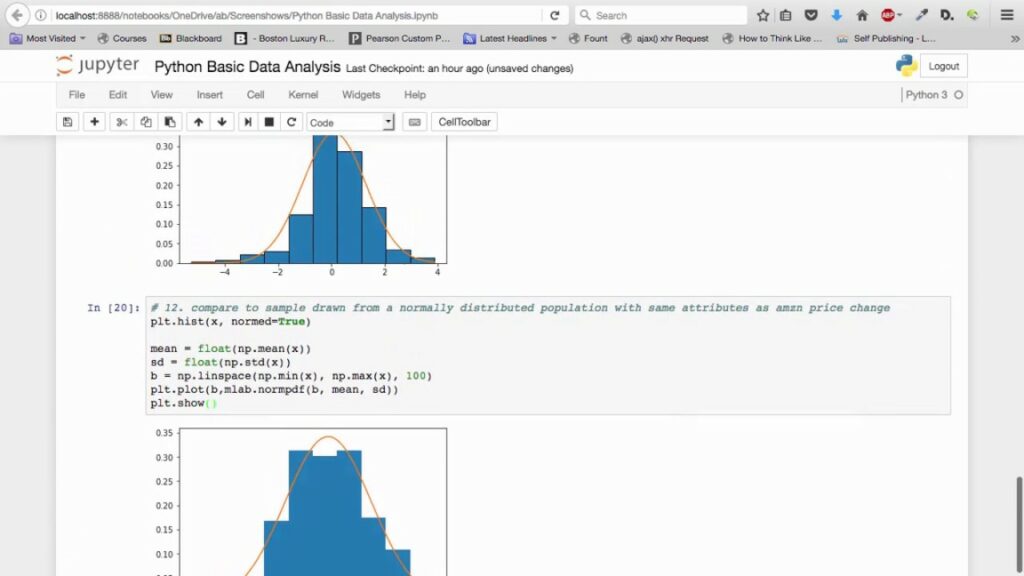
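The grouping, merging, and concatenation functions from this section might be used together as in the sketch below. The sales and regions tables, and their column names, are hypothetical.

```python
import pandas as pd

# Hypothetical sales and region tables sharing a "store" column
sales = pd.DataFrame({
    "store": ["A", "A", "B", "B", "C"],
    "amount": [100, 150, 200, 50, 75],
})
regions = pd.DataFrame({
    "store": ["A", "B", "C"],
    "region": ["north", "south", "north"],
})

# Group by store and compute several aggregates per group
per_store = sales.groupby("store")["amount"].agg(["sum", "mean", "count"])

# Merge on the shared column (an inner join by default)
merged = pd.merge(sales, regions, on="store")

# Aggregate the merged table by region
per_region = merged.groupby("region")["amount"].sum()

# Concatenate rows from two DataFrames with the same columns
more_sales = pd.DataFrame({"store": ["C"], "amount": [25]})
all_sales = pd.concat([sales, more_sales], ignore_index=True)

print(per_store)
print(per_region)
```

Passing ignore_index=True to pd.concat() renumbers the rows, which avoids duplicate index labels in the combined result.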

Data Visualization

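Pandas integrates well with plotting libraries like Matplotlib and Seaborn. The df.plot() method uses Matplotlib by default, and Seaborn's plotting functions accept DataFrames directly: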

import matplotlib.pyplot as plt

import seaborn as sns

# Example: draw a bar chart of the DataFrame's numeric columns

df.plot(kind='bar')

plt.show()

Time Series Analysis

Pandas provides powerful tools for working with time series data:

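- pd.to_datetime(): Convert strings to datetime objects
- Resampling: df.resample(), df.asfreq()
- Shifting: df.shift() (the older df.tshift() was removed in pandas 2.0; use df.shift() with the freq argument to shift the index instead)
- Rolling windows: df.rolling()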
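A short sketch of these time series tools on a Series with a datetime index follows. The dates and readings are hypothetical, and the weekly frequency and three-observation window are arbitrary choices for illustration.

```python
import pandas as pd

# Hypothetical readings; the dates start as plain strings
dates = pd.to_datetime(["2024-01-01", "2024-01-02", "2024-01-03",
                        "2024-01-08", "2024-01-09"])
ts = pd.Series([10.0, 12.0, 11.0, 15.0, 14.0], index=dates)

# Resample to weekly frequency and average the values in each week
weekly_mean = ts.resample("W").mean()

# Reindex to a regular daily frequency; days without data become NaN
daily = ts.asfreq("D")

# Shift the values by one row; the index stays the same
previous = ts.shift(1)
change = ts - previous

# Shift the index by one day instead of moving the values
shifted_index = ts.shift(1, freq="D")

# Rolling mean over a window of three observations
rolling_mean = ts.rolling(window=3).mean()

print(weekly_mean)
print(rolling_mean)
```

The first two entries of the rolling mean are NaN because a full window of three observations is not yet available.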

Advanced Topics

- Categorical data: Using pd.Categorical for efficient handling of categorical variables

- High-performance computing: Leveraging Pandas with NumPy and libraries like Dask for large datasets

- Machine learning integration: Preparing data for machine learning models using Pandas

- Financial data analysis: Applying Pandas to financial datasets for analysis and modeling
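As one illustration of the categorical data point, the sketch below stores a repetitive string column as an ordered categorical and compares memory use. The size labels and the repetition count are hypothetical, and the actual savings depend on the data and the Pandas version.

```python
import pandas as pd

# Hypothetical column with a few distinct values repeated many times
values = ["small", "large", "medium", "small"] * 1000
sizes = pd.Series(values)

# Store the same values as an ordered categorical with explicit categories
sizes_cat = pd.Series(
    pd.Categorical(values, categories=["small", "medium", "large"], ordered=True)
)

# Each value is stored as a small integer code plus a lookup table
print(sizes_cat.cat.categories)
print(sizes_cat.cat.codes.head())

# Compare memory use of the two representations
string_bytes = sizes.memory_usage(deep=True)
category_bytes = sizes_cat.memory_usage(deep=True)
print(string_bytes, category_bytes)

# Ordered categories support comparisons against a category value
at_least_medium = sizes_cat[sizes_cat >= "medium"]
print(len(at_least_medium))
```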

Conclusion

Pandas is a versatile and efficient tool for data analysis in Python. By mastering its core concepts, you can explore, clean, manipulate, and visualize data to extract useful insights. This guide has given an overview of the main features, but there is always more to learn. Experiment with different datasets and explore the advanced techniques above to build your data analysis skills.